What Is a Deepfake?

The term "Deepfake" is a blend of "deep learning" and "fake". Deep learning algorithms trained on large datasets can realistically imitate human faces, voices, and movements, and they are used to alter photos, videos, or audio recordings.

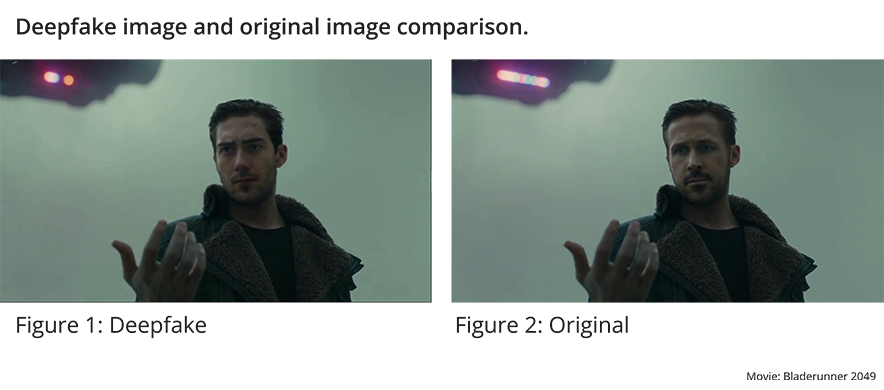

The term Deepfake first emerged in 2017. It was coined by a Reddit user who used AI-powered software to swap actors' faces in videos. Deepfakes are often created with Generative Adversarial Networks (GANs), a class of AI models introduced in 2014. A GAN sets two networks against each other: a generator that produces fake images and a discriminator that tries to tell them apart from real ones. As each network improves against the other, the generator learns to produce highly realistic fake images.
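The competition between the two networks can be written down precisely. In the original 2014 GAN formulation, the generator $G$ maps random noise $z$ to a fake sample $G(z)$, and the discriminator $D$ outputs the probability that its input is real. Training is framed as a minimax game over the value function below. It is shown here as general background, not as the exact method of any particular Deepfake tool.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

$D$ is trained to maximize $V$ by assigning high probability to real images and low probability to generated ones. $G$ is trained to minimize $V$ by producing images that $D$ mistakes for real.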

What Are the Uses and Applications of Deepfake Technology?

Deepfake technology has legitimate uses. It can deliver messages from important figures during emergencies and crises, create realistic effects in the entertainment industry, and reenact historical events for education. It can also provide realistic speech samples for language-learning tools. The most concerning use, however, is malicious: creating manipulative or misleading content. The production of fake news and other uses that undermine public trust are the technology's most significant problems.

Consequently, Deepfake technology raises ethical and security concerns. One technique, known as "face swapping", replaces one person's face with another's. Deepfake videos can put fabricated statements in the mouths of influential people, and this false content can erode public trust. By undermining confidence in what is real, these technologies can accelerate the spread of fake news and cause serious social harm.

For example, The Wall Street Journal reported in 2019 that the CEO of a UK-based energy company was defrauded through a Deepfake voice-phishing attack and transferred $243,000 to the criminals' account. The fraudsters used AI-based voice-imitation software to impersonate the chief executive of the firm's parent company. This convinced the CEO that he was speaking with his superior.

The European Union's Regulations on Deepfakes

Regulating Deepfake technology is challenging. The EU's Artificial Intelligence Act requires that Deepfake content be disclosed as artificially generated or manipulated. Under the General Data Protection Regulation (GDPR), using a person's personal data, such as their face or voice, to create a Deepfake generally requires their consent or another lawful basis. The Digital Services Act (DSA) lets users report illegal content, but the definition of 'illegal' remains vague. Online platforms are developing proactive policies against Deepfakes, but they face legal and technological challenges in regulating the technology.

How Can We Detect Deepfake?

Deepfake detection aims to provide a defense against the creation and spread of fake content. Detection tools analyze details in videos such as faces, body movements, and eye movements. AI and machine learning (ML) models learn to distinguish real content from fake by training on large datasets. Anyone can experiment with detection using open-source projects written in Python. These projects typically build on deep learning libraries such as PyTorch and on model architectures such as ResNet. Common detection methods include:

- Face Forensics: Manipulated faces in Deepfake videos often show inconsistencies compared with genuine faces. This research field aims to identify differences between original and manipulated faces by analyzing their geometric and physical features. Face forensics is mainly pursued in academic research.

- Frame Analysis: Examining a video frame by frame can reveal facial expressions and movements that change abruptly or unnaturally between consecutive frames. Realistic facial expressions and eye movements are difficult to synthesize consistently, so such irregularities can stand out.

- Movement Analysis: Deepfake videos may not match the movement style and rhythm of the original footage. Movement-analysis algorithms model natural human movement and compare it with the movement in a suspect video.

- Eye Analysis: Deepfake videos may fail to reproduce natural behaviors such as blinking. Several signs can indicate Deepfake content: irregular blinking, eye expressions that do not match the emotion being conveyed, or eyes that stay open for unusually long periods. For this reason, algorithms that analyze eye movements are among the methods used for Deepfake detection.

- Voice Analysis: Deepfake technology can manipulate audio as well as video. Voice analysis attempts to determine whether a voice recording is authentic, for example by checking for robotic or unnatural tones.
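The following minimal Python sketch makes the eye-analysis idea concrete by counting blinks. It uses the eye aspect ratio (EAR) described by Soukupová and Čech (2016), which is computed from six landmarks around one eye. The sketch assumes those landmarks have already been extracted by a face-landmark detector, which is not shown. The function names, the 0.2 EAR threshold, and the band of 5 to 40 blinks per minute are hypothetical, illustrative choices rather than validated values.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio (EAR) from six landmarks p1..p6 around one eye.

    p1 and p4 are the eye corners; p2/p3 lie on the upper lid and p6/p5 on
    the lower lid, so EAR drops towards 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # the clip ends while the eye is still closed
        blinks += 1
    return blinks


def blink_rate_is_suspicious(
    ears: Sequence[float],
    fps: float,
    low: float = 5.0,    # hypothetical lower bound, blinks per minute
    high: float = 40.0,  # hypothetical upper bound, blinks per minute
    threshold: float = 0.2,
    min_frames: int = 2,
) -> bool:
    """Flag clips whose blinks per minute fall outside the illustrative [low, high] band."""
    if fps <= 0 or not ears:
        raise ValueError("need a positive frame rate and at least one frame")
    minutes = len(ears) / fps / 60.0
    rate = count_blinks(ears, threshold, min_frames) / minutes
    return rate < low or rate > high
```

Consider a one-minute, 30 fps clip in which the speaker never blinks. It yields a rate of 0 and is flagged. A single cue like this is easy to defeat, though, because later Deepfake generators can reproduce blinking. It works best as one signal among several.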

In practice, Deepfake detection usually combines several of these methods and keeps evolving as the technology advances. Detection is never entirely accurate, so it requires continual updates.
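Combining methods can be as simple as taking a weighted average of per-method scores. The sketch below assumes each detector already outputs a probability that the clip is fake. The method names, weights, and 0.5 threshold are hypothetical. In practice they would be tuned on labeled data.

```python
from typing import Dict, Mapping

# Illustrative weights only (an assumption); real systems tune them on labeled data.
DEFAULT_WEIGHTS: Dict[str, float] = {
    "face": 0.30,
    "frame": 0.20,
    "movement": 0.15,
    "eye": 0.15,
    "voice": 0.20,
}


def fuse_scores(scores: Mapping[str, float], weights: Mapping[str, float] = DEFAULT_WEIGHTS) -> float:
    """Weighted average of per-method 'probability of fake' scores in [0, 1].

    Methods that did not run (e.g. a clip with no audio) are left out and the
    remaining weights are renormalized so the result stays in [0, 1].
    """
    used = {name: s for name, s in scores.items() if name in weights}
    if not used:
        raise ValueError("no recognized detector scores")
    for name, s in used.items():
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"score for {name!r} must be in [0, 1], got {s}")
    total = sum(weights[name] for name in used)
    return sum(weights[name] * s for name, s in used.items()) / total


def verdict(scores: Mapping[str, float], threshold: float = 0.5) -> str:
    """Turn the fused score into a label; the 0.5 cut-off is also an assumption."""
    return "likely fake" if fuse_scores(scores) >= threshold else "likely real"
```

The function skips missing methods and renormalizes the remaining weights. This keeps the fused score comparable when, for example, a video has no audio track. A high fused score is a reason for closer review, not proof of manipulation.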

What Should Be Done Against the Threats of Deepfake Technology?

Countering the risks and threats created by technology requires technology itself. Social media platforms and cybersecurity organizations in particular should develop anti-Deepfake software and algorithms to prevent the malicious use of Deepfake technology. Such software and solutions are important for protecting the rights of everyone with a digital identity.

Cybersecurity companies offer a range of strategies and services for fighting Deepfakes. These services typically include:

- Development of Tools: Using AI and machine learning algorithms to analyze videos and images for signs of manipulation.

- Analysis and Separation: Advanced tools and reference databases are used to distinguish Deepfake videos and content from original videos or photos.

- Awareness and Training: Providing information and education on Deepfake technology and its detection.

- Defense Against Cyber Attacks: Developing methods to defend against cyber attacks that use Deepfakes. This may include real-time monitoring and alert services.

- Additional Services: Offering extra services like digital watermarking, blockchain verification, collaborations with social media platforms, and API integration.
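Verification services, such as the blockchain verification listed above, generally rest on one idea. A cryptographic fingerprint of the original content is recorded in a tamper-evident log, and later copies are compared against it. The sketch below is a toy, in-memory version that uses SHA-256 and a simple hash chain. The class and method names are hypothetical. A real blockchain also adds distribution and consensus, and this sketch omits both. Without them, a local hash chain only exposes edits made without recomputing every later hash.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List, Optional


def sha256_hex(data: bytes) -> str:
    """Hex SHA-256 fingerprint of raw bytes."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class Entry:
    content_id: str
    content_hash: str  # SHA-256 of the original media bytes
    prev_hash: str     # entry_hash of the previous entry (chains the log)
    entry_hash: str    # SHA-256 over this entry's other fields


class ContentLedger:
    """Toy append-only, hash-chained log of original-content fingerprints (hypothetical)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: List[Entry] = []

    @staticmethod
    def _hash_entry(content_id: str, content_hash: str, prev_hash: str) -> str:
        payload = json.dumps([content_id, content_hash, prev_hash]).encode("utf-8")
        return sha256_hex(payload)

    def register(self, content_id: str, media: bytes) -> Entry:
        """Append a fingerprint of `media`, linked to the previous entry."""
        prev = self.entries[-1].entry_hash if self.entries else self.GENESIS
        content_hash = sha256_hex(media)
        entry = Entry(content_id, content_hash, prev,
                      self._hash_entry(content_id, content_hash, prev))
        self.entries.append(entry)
        return entry

    def chain_is_intact(self) -> bool:
        """Recompute every link and check the stored hashes are consistent."""
        prev = self.GENESIS
        for e in self.entries:
            if e.prev_hash != prev:
                return False
            if e.entry_hash != self._hash_entry(e.content_id, e.content_hash, e.prev_hash):
                return False
            prev = e.entry_hash
        return True

    def matches_original(self, content_id: str, media: bytes) -> Optional[bool]:
        """True/False if `content_id` was registered, None if it is unknown."""
        for e in reversed(self.entries):
            if e.content_id == content_id:
                return e.content_hash == sha256_hex(media)
        return None
```

An exact hash changes if even one byte differs, so this check only confirms byte-identical copies. A re-encoded or resized version of a genuine video would fail it. For this reason, practical systems pair it with perceptual hashing or signed provenance metadata.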

Should you have any queries or need further details, please contact us.
